In [1]:

from IPython.display import display, HTML

display(HTML("<style> .container{width:100% !important;}</style>"))

In [2]:

import tensorflow as tf

import warnings

import numpy as np

import matplotlib.pyplot as plt

plt.rcParams["figure.figsize"] = (12, 8)

In [3]:

from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets("./mnist/data/", one_hot=True)

In [4]:

global_step = tf.Variable(0, trainable=False, name="global_step")

X = tf.placeholder(tf.float32, shape=[None, 784], name="X") # None, 784

Y = tf.placeholder(tf.float32, shape=[None, 10], name="Y")

W1 = tf.Variable(tf.random_normal([784, 256], mean=0, stddev=0.01), name="W1")

W2 = tf.Variable(tf.random_normal([256, 256], mean=0, stddev=0.01), name="W2")

W3 = tf.Variable(tf.random_normal([256, 10], mean=0, stddev=0.011), name="W3")

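# Wrap the biases in tf.Variable so the optimizer can update them;
# a bare tf.zeros(...) is a constant and would stay at zero during training.
b1 = tf.Variable(tf.zeros([256]), name="bias1")

b2 = tf.Variable(tf.zeros([256]), name="bias2")

b3 = tf.Variable(tf.zeros([10]), name="bias3")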

In [5]:

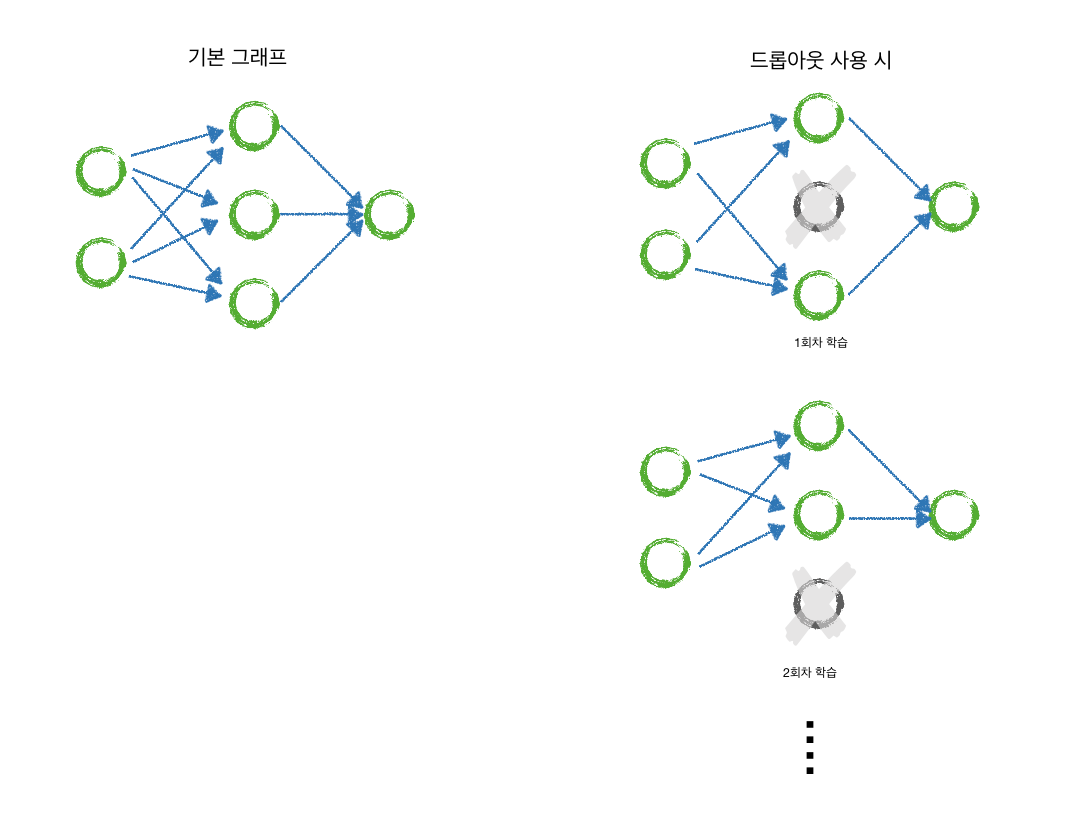

keep_prob = tf.placeholder(tf.float32)

with tf.name_scope("layer1"):
    L1 = tf.add(tf.matmul(X, W1), b1)
    L1 = tf.nn.relu(L1)
    L1 = tf.nn.dropout(L1, keep_prob)

with tf.name_scope("layer2"):
    L2 = tf.add(tf.matmul(L1, W2), b2)
    L2 = tf.nn.relu(L2)
    L2 = tf.nn.dropout(L2, keep_prob)

with tf.name_scope("layer3"):
    model = tf.add(tf.matmul(L2, W3), b3)
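
The two `tf.nn.dropout(..., keep_prob)` calls above keep each activation with probability `keep_prob` and scale the survivors by `1 / keep_prob`, so the expected activation stays the same. The following is a minimal pure-Python sketch of that behavior, not TensorFlow's implementation; the `dropout` helper and its `rng` argument are hypothetical names introduced only for illustration.

```python
import random


def dropout(values, keep_prob, rng):
    """Inverted dropout on a flat list (illustrative helper, not a TF API).

    Each value survives with probability keep_prob and is scaled by
    1 / keep_prob; dropped values become 0.0.
    """
    if not 0.0 < keep_prob <= 1.0:
        raise ValueError("keep_prob must be in (0, 1]")
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]


rng = random.Random(0)            # fixed seed so the run is repeatable
activations = [1.0] * 10000       # stand-in for one layer's ReLU output
dropped = dropout(activations, 0.8, rng)

kept_fraction = sum(1 for v in dropped if v != 0.0) / len(dropped)
mean_after = sum(dropped) / len(dropped)
print("kept fraction: {:.3f}, mean after dropout: {:.3f}".format(kept_fraction, mean_after))
```

With `keep_prob` set to 1 (as in the evaluation cell) nothing is dropped and nothing is rescaled, which is why the test pass feeds `keep_prob: 1`.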

In [6]:

with tf.name_scope("optimizer"):
    cost = tf.reduce_mean(
        tf.nn.softmax_cross_entropy_with_logits_v2(labels=Y, logits=model))
    opt = tf.train.AdamOptimizer(learning_rate=0.001).minimize(cost, global_step=global_step)
    tf.summary.scalar("cost", cost)
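
The `cost` above is the batch mean of the softmax cross-entropy between the one-hot labels `Y` and the raw logits `model`. As a rough sketch of what that quantity is (assuming one-hot labels, and not reproducing TensorFlow's internals), it can be written in plain Python; `softmax_cross_entropy` and `mean_cost` are hypothetical helper names.

```python
import math


def softmax_cross_entropy(logits, labels):
    """Cross-entropy of softmax(logits) against a label distribution.

    Uses the log-sum-exp trick (subtracting the max logit) so large
    logits do not overflow.
    """
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    # log softmax(z_i) = z_i - log_sum_exp
    return -sum(y * (z - log_sum_exp) for z, y in zip(logits, labels))


def mean_cost(batch_logits, batch_labels):
    """Average the per-example loss over a batch, like tf.reduce_mean."""
    losses = [softmax_cross_entropy(l, y) for l, y in zip(batch_logits, batch_labels)]
    return sum(losses) / len(losses)


# Ten equal logits give a uniform softmax, so the loss is log(10).
uniform_loss = softmax_cross_entropy([0.0] * 10, [1] + [0] * 9)
print("loss for uniform prediction: {:.4f}".format(uniform_loss))
```

A freshly initialized network with tiny weights predicts close to uniform, so a first-epoch average cost far above `log(10)` would hint at a problem in the setup.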

In [7]:

init = tf.global_variables_initializer()

sess = tf.Session()

# saver = tf.train.Saver(tf.global_variables())

sess.run(init)

merged = tf.summary.merge_all()

writer = tf.summary.FileWriter("./logs/mnist_dropout", sess.graph)

In [8]:

batch_size = 50

total_batch = mnist.train.num_examples // batch_size

cost_epoch = []

In [9]:

%%time

for epoch in range(30):
    total_cost = 0

    for i in range(total_batch):
        batch_xs, batch_ys = mnist.train.next_batch(batch_size)
        _, cost_val = sess.run([opt, cost], feed_dict={X:batch_xs, Y:batch_ys, keep_prob: 0.8})
        total_cost += cost_val

    cost_epoch.append(total_cost)

    summary = sess.run(merged, feed_dict={X:batch_xs, Y:batch_ys, keep_prob: 0.8})
    writer.add_summary(summary, global_step=sess.run(global_step))

    print("epoch: {}, Avg.cost: {}".format(epoch+1, total_cost / total_batch))

In [10]:

plt.plot(cost_epoch, "g")

plt.title("cost_epoch")

plt.show()

In [11]:

is_correct = tf.equal(tf.argmax(model, 1), tf.argmax(Y, 1))

accuracy = tf.reduce_mean(tf.cast(is_correct, tf.float32))

print("accuracy: {}".format(sess.run(accuracy, feed_dict={X: mnist.test.images,
                                                          Y: mnist.test.labels,
                                                          keep_prob: 1})))
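
The accuracy cell compares the index of the largest logit with the index of the 1 in each one-hot label and averages the matches. A small pure-Python sketch of the same computation on made-up numbers follows; `argmax` and `accuracy_of` are hypothetical helpers, and the toy `logits` and `labels` are not MNIST data.

```python
def argmax(row):
    """Index of the largest entry in a list of scores."""
    return max(range(len(row)), key=lambda i: row[i])


def accuracy_of(batch_logits, batch_labels):
    """Fraction of rows where the predicted class equals the labeled class."""
    hits = [argmax(l) == argmax(y) for l, y in zip(batch_logits, batch_labels)]
    return sum(hits) / len(hits)


# Toy two-class example: the third prediction disagrees with its label.
logits = [[0.1, 0.9], [0.8, 0.2], [0.3, 0.7]]
labels = [[0, 1], [1, 0], [1, 0]]
score = accuracy_of(logits, labels)
print("accuracy: {:.3f}".format(score))
```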

In [12]:

### tensorboard graph

from IPython.display import clear_output, Image, display, HTML

def strip_consts(graph_def, max_const_size=32):
    """Strip large constant values from graph_def."""
    strip_def = tf.GraphDef()
    for n0 in graph_def.node:
        n = strip_def.node.add()
        n.MergeFrom(n0)
        if n.op == 'Const':
            tensor = n.attr['value'].tensor
            size = len(tensor.tensor_content)
            if size > max_const_size:
                # tensor_content is a bytes field, so encode the placeholder text
                tensor.tensor_content = tf.compat.as_bytes("<stripped %d bytes>" % size)
    return strip_def

def show_graph(graph_def, max_const_size=32):
    """Visualize TensorFlow graph."""
    if hasattr(graph_def, 'as_graph_def'):
        graph_def = graph_def.as_graph_def()
    strip_def = strip_consts(graph_def, max_const_size=max_const_size)
    code = """
        <script>
          function load() {{
            document.getElementById("{id}").pbtxt = {data};
          }}
        </script>
        <link rel="import" href="https://tensorboard.appspot.com/tf-graph-basic.build.html" onload=load()>
        <div style="height:600px">
          <tf-graph-basic id="{id}"></tf-graph-basic>
        </div>
    """.format(data=repr(str(strip_def)), id='graph'+str(np.random.rand()))

    # Escape double quotes so the markup can sit inside the srcdoc attribute
    iframe = """
        <iframe seamless style="width:1200px;height:620px;border:0" srcdoc="{}"></iframe>
    """.format(code.replace('"', '&quot;'))
    display(HTML(iframe))

In [13]:

show_graph(tf.get_default_graph().as_graph_def())

In [14]:

# ### tensorboard
# def TB(cleanup=False):
#     import webbrowser
#     webbrowser.open('http://127.0.0.1:6006')
#     !tensorboard --logdir="./logs/mnist_dropout/"
# TB()
